AI Governance & Data Ownership in Multimodal LLMs


By arthurcaldera

- October 04, 2025


Artificial intelligence is no longer just about text analysis. Today's multimodal large language models can process text, images, video, and audio at the same time, emulating human communication in ways we've never seen before. But with this power comes a massive question that's keeping regulators, developers, and users up at night: who owns the data these systems learn from, and how do we protect everyone's privacy?

As we work our way through 2025, this isn't just a technical problem. It's a fundamental challenge that will shape the integration of AI in our society, from healthcare to finance to everyday consumer applications.

The Magnitude of the Privacy Challenge

Here's the uncomfortable truth: AI arguably poses a greater data privacy risk than any earlier technological advance. The volume of information involved is staggering. These systems routinely train on terabytes or petabytes of data, including healthcare records, social media posts, financial data, and biometric data used for facial recognition.

When you're dealing with that much data, the odds are good that some of it is deeply personal and sensitive. And with more data being collected, stored, and transmitted than ever before, the opportunities for exposure or misuse multiply.

A Stanford University researcher put it plainly: ten years ago, people thought about data privacy in terms of online shopping preferences. Now, ubiquitous data collection is feeding AI systems that have the potential to affect civil rights across society. That's a fundamentally different ballgame.

What Is Different about Multimodal Models

Multimodal large language models possess features that differentiate them from previous AI systems. They can accept various kinds of data inputs such as text, videos, and images, and create a variety of outputs that are not restricted to the input type. They're unique in their ability to perform tasks they weren't programmed to perform.

This flexibility makes them incredibly powerful. It also makes them far more complex from a governance perspective. When a system can process and combine different types of data in unexpected ways, tracking data ownership and keeping data private become much harder.

The World Health Organization released comprehensive guidance acknowledging this challenge, with more than 40 recommendations for governments, technology companies and service providers to ensure appropriate use of these models. The guidance came with a stark warning: these technologies have been adopted faster than any consumer application in history, with platforms like ChatGPT, Bard and Bert entering public consciousness almost overnight.

The Core Privacy Risks

Multiple privacy issues arise when we look at how these systems work:

Unconsented Data Collection: Controversy erupts regularly when data is collected without express consent or knowledge. LinkedIn recently came under fire after users discovered they had been automatically opted in to having their data used to train generative AI models. Many had no idea this was going on.

Even more troubling are cases where data shared for one purpose gets repurposed for AI training. A surgical patient in California reportedly discovered that medical photos from her treatment, which she had consented to for medical purposes only, had ended up in an AI training dataset. That's not just a privacy violation. It's a fundamental breach of trust.

Surveillance Amplification: AI doesn't create surveillance concerns from scratch, but it amplifies them dramatically. When AI models are used to analyze surveillance data, the results can be harmful, particularly when the models exhibit bias. Multiple wrongful arrests of people of color have been linked to AI-powered decision-making in law enforcement, underscoring the real-world impact that privacy violations can have.

Data Breaches and Leakage: AI models hold large amounts of sensitive data, which makes them a prime target for attackers. Through techniques such as prompt injection, attackers can trick systems into releasing private information. In one well-publicized incident, ChatGPT showed some users the titles of other users' conversation histories, demonstrating how even sophisticated systems can accidentally leak data.

Inadequate Security Measures: Risk doesn't come only from external attacks. Sometimes AI systems simply lack proper security measures to protect the sensitive data they hold. A healthcare company developing an in-house diagnostic app, for example, may accidentally expose customer data to other users through certain prompts. Even unintentional sharing of data can lead to serious breaches of privacy.
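One common mitigation for accidental leakage is to filter model output before it reaches the user. The snippet below is a minimal sketch of that idea, not a complete defense: the function name `redact_output` and both regular expressions are illustrative assumptions, and real deployments would need much broader, locale-aware detection of personal data.

```python
import re

# Illustrative patterns only (assumptions for this sketch); they catch
# common email and phone formats, not every kind of personal data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_output(text: str) -> str:
    """Mask email addresses and phone-like numbers in model output."""
    text = EMAIL_RE.sub("[REDACTED EMAIL]", text)
    text = PHONE_RE.sub("[REDACTED PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact_output("Contact jane.doe@example.com or +1 415 555 0100."))
```

A filter like this sits at the last step of the response pipeline, so it helps regardless of whether the leak came from a prompt injection or from an ordinary bug.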

The Regulatory Response

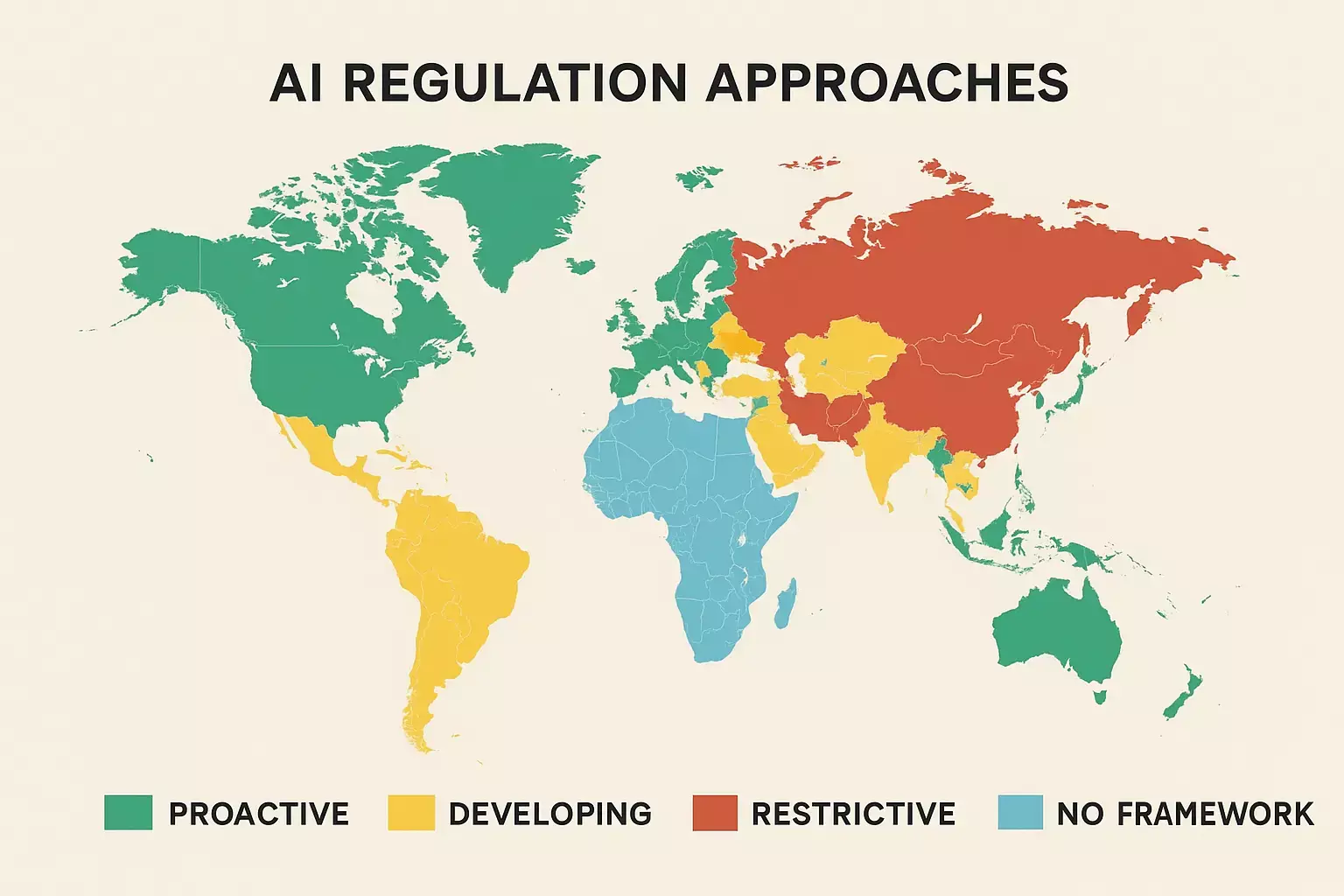

Policymakers around the world are scrambling to meet these challenges, though approaches differ considerably depending on the region.

European Leadership: The EU's General Data Protection Regulation establishes rigorous principles for the processing of personal data, such as purpose limitation, data minimization, and storage limitation. The game-changing EU AI Act goes further, becoming the world's first comprehensive regulatory framework for AI. It bans some uses of AI outright and imposes strict governance requirements on high-risk systems.

United States Approach: America has taken a more fragmented approach. Multiple states have implemented their own data privacy laws, such as the California Consumer Privacy Act and the Texas Data Privacy and Security Act. Utah was the first state to pass a major statute specifically addressing the use of AI, with its 2024 Artificial Intelligence Policy Act.

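At the federal level, the White House Office of Science and Technology Policy released a "Blueprint for an AI Bill of Rights," although it is nonbinding. The framework outlines five principles: safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation; and human alternatives, consideration, and fallback. Under the data privacy principle, it urges AI professionals to ask for people's consent before using their data.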

China's Regulations: China is one of the first countries to implement AI-specific regulations. Its 2023 Interim Measures for the Administration of Generative Artificial Intelligence Services state that generative AI services must respect the legitimate rights of others and must not infringe on portrait rights, reputation rights, privacy rights, or personal information rights.

Data Ownership: The Key Question

Beyond privacy concerns lies a more fundamental issue: who really owns the data that trains these models?

This question doesn't have a simple answer. When you share a picture on social media, do you still own it? What about when an AI company scrapes that photo to train their model? What happens when the AI then creates new images based on your original photo? The ownership chain gets murky quickly.

Current regulations tell some of the story. Under GDPR, individuals have a number of rights over their personal data, including the right to access, correct and delete data. But how do you delete your data from an AI model that's already been trained on it? The model has already learned patterns from your information. Simply deleting the original data doesn't erase that learning.

This creates a tension between individual data rights and the practical realities of how AI systems work. It's a tension regulators, developers and legal experts are still trying to sort out.
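One partial answer is to keep a record of which people's data went into which model versions, so that an erasure request at least identifies the models that need retraining or other remediation. The following is a minimal sketch under that assumption; the `TrainingLineage` class, its method names, and the identifiers in the usage example are all hypothetical, and it does nothing to remove what a model has already learned.

```python
from collections import defaultdict


class TrainingLineage:
    """Records which data subjects contributed to which model versions."""

    def __init__(self) -> None:
        # Maps a subject ID to the set of model versions trained on their data.
        self._models_by_subject: dict[str, set[str]] = defaultdict(set)

    def record_training_run(self, model_version: str, subject_ids: list[str]) -> None:
        """Note that a training run used data from the given subjects."""
        for subject_id in subject_ids:
            self._models_by_subject[subject_id].add(model_version)

    def models_affected_by_erasure(self, subject_id: str) -> list[str]:
        """Return the model versions that learned from this subject's data."""
        return sorted(self._models_by_subject.get(subject_id, set()))


if __name__ == "__main__":
    lineage = TrainingLineage()
    lineage.record_training_run("vision-v1", ["user-17", "user-42"])
    lineage.record_training_run("vision-v2", ["user-42"])
    print(lineage.models_affected_by_erasure("user-42"))
```

Deleting the source record stays a separate step. The lineage only tells you where the learning may persist.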

Building Better Governance Frameworks

The World Health Organization's guidance offers a roadmap for addressing these challenges. Key recommendations include:

For Governments: Invest in public infrastructure, such as computing power and public datasets, whose use requires adherence to ethical principles. Use laws and policies to enforce ethical obligations and human rights standards in AI applications. Assign regulatory agencies to assess and approve models for critical uses. Introduce mandatory post-release auditing and impact assessments by independent third parties.

For Developers: Make sure models are not designed by engineers and scientists alone. Involve potential users and other stakeholders from the earliest stages of development. Design systems to perform well-defined tasks with the required accuracy and reliability. Be able to predict and understand possible secondary outcomes.

Practical Steps for Organizations

Organizations implementing AI systems can take tangible steps to safeguard privacy and define clear data ownership:

Privacy by Design: Evaluate and mitigate privacy risks across the entire AI development lifecycle. Don't treat privacy as an afterthought. Build it into the system from the beginning.

Data Minimization: Collect only data that can be collected lawfully, and use it in ways consistent with people's expectations. Establish clear timelines for how long data will be stored, with the goal of deleting it as soon as it's no longer needed.

Transparency and Control: Give people transparency and control over their data, including mechanisms for consent, access, and correction. If the use case changes, reacquire consent. Don't assume that previous consent covers new purposes.

Improved Security: Implement security best practices such as cryptography, anonymization, and access control mechanisms. Protect not only data, but also metadata.

Sensitive Domain Protections: Provide additional protections for data from health, employment, education, criminal justice, and personal finance. Take particular care with data from or about children.

Accountability Mechanisms: Respond to requests from individuals who want to find out which of their data is being used. Proactively publish summary reports on data use, access, and storage. Report security lapses or breaches as soon as possible.
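Two of the steps above, purpose-specific consent and storage timelines, translate fairly directly into checks that can run before data is used. Here is a minimal sketch of that idea; the `PersonalRecord` type, its field names, and the purpose strings are hypothetical, and a real system would also need audit logging and actual deletion of expired records.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class PersonalRecord:
    subject_id: str
    collected_at: datetime
    retention: timedelta  # how long the record may be kept
    consented_purposes: set[str] = field(default_factory=set)

    def may_use_for(self, purpose: str) -> bool:
        """Consent is checked per purpose; earlier consent does not carry over."""
        return purpose in self.consented_purposes

    def is_expired(self, now: datetime) -> bool:
        """True once the retention period has elapsed."""
        return now >= self.collected_at + self.retention


def usable_records(records: list[PersonalRecord], purpose: str, now: datetime) -> list[PersonalRecord]:
    """Keep only records that are consented for this purpose and not expired."""
    return [r for r in records if r.may_use_for(purpose) and not r.is_expired(now)]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    record = PersonalRecord("user-1", now - timedelta(days=10), timedelta(days=30), {"diagnosis"})
    # Not consented for training, so nothing is returned.
    print(usable_records([record], "model_training", now))
```

Treating the purpose as an explicit argument forces every new use of the data through the consent check instead of relying on a one-time opt-in.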

The Road Ahead

As we move deeper into 2025, the discussion about AI governance and data ownership continues to evolve. We're beyond the exploratory stage. These systems are being deployed at scale, processing large amounts of data, and making decisions that affect people's lives.

The question is not whether to use these powerful tools. Organizations are already using AI models to increase productivity and unlock value: 96% of organizations surveyed are already using AI in some form. The challenge is doing so responsibly, with clear governance frameworks, robust privacy protections, and respect for data ownership rights.

Emerging technology solutions are helping organizations keep up with changing regulations. Automated tools can now identify regulatory updates and translate them into enforceable policies. Data governance platforms help businesses track data lineage, manage consent, and audit AI systems for compliance.

But technology alone won't solve this challenge. It requires sustained collaboration between policymakers, developers, organizations, and civil society. It requires being transparent about how systems work, having clear lines of accountability when things go wrong, and a real commitment to putting human rights and dignity at the center of AI development.

The Bottom Line

Multimodal AI systems are an incredible advance in technology. They have the ability to improve healthcare, strengthen education, speed up scientific research, and solve complex problems we couldn't solve before. But realizing this potential requires answering the fundamental questions of data ownership and privacy head-on.

We can't just take this huge amount of data, throw it into data models that are increasingly sophisticated, and hope that the privacy issues will somehow resolve themselves. They won't. Without clear governance frameworks, strong protections and genuine respect for data ownership rights, we risk the development of systems that undermine the very trust and social fabric they're supposed to serve.

The good news is that frameworks exist. Regulations are emerging. Best practices are being created. Organizations that engage with these challenges seriously, that build privacy and ethical governance into their AI systems from the ground up, won't just avoid regulatory trouble. They're going to build systems that people actually trust and actually want to use.

That's what the real promise of AI governance is: not just preventing harm but creating technology that is truly in the best interests of humanity. And that begins with taking data ownership and privacy seriously from the get-go.
