Humanoid Robot Security Risks Exposed by Researchers

By arthurcaldera

October 04, 2025

The world of humanoid robots just got a lot more complicated. While companies like Tesla and Boston Dynamics grab headlines with their flashy prototypes, a cheaper alternative has been quietly making its way into research labs, universities and even some police departments. But according to new research, this affordability comes with a serious catch.

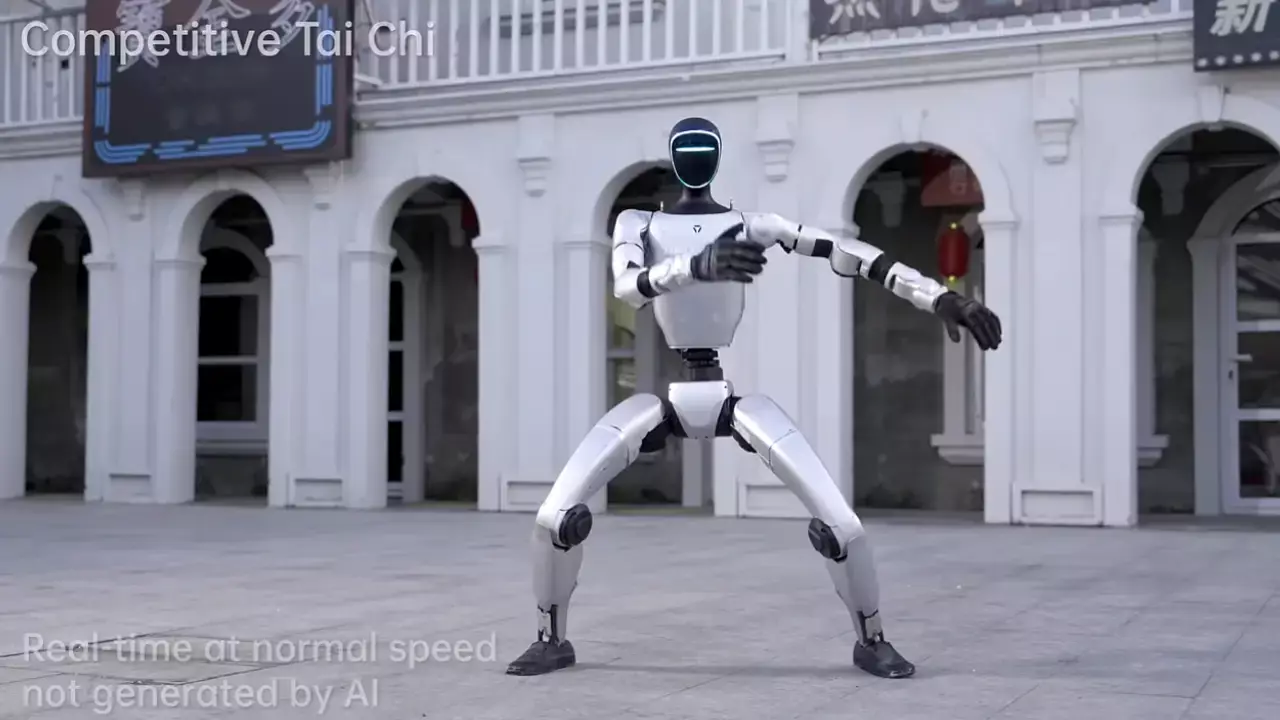

Security researchers at Alias Robotics recently published an in-depth analysis of the Unitree G1, a four-foot-tall humanoid robot that costs in the neighborhood of $16,000. What they found raises some very uncomfortable questions about what happens when robots become accessible and vulnerable at the same time.

Image source: https://www.youtube.com/watch?v=Nkh6RUocD8c

The Trouble With Being Affordable

The Unitree G1 has become something of a workhorse in robotics research. At about $16,000, it's inexpensive enough for universities, robotics clubs and startups to simply buy off the shelf. You'll find these machines learning to climb stairs, pick up boxes and interact with people in labs from Beijing to Boston.

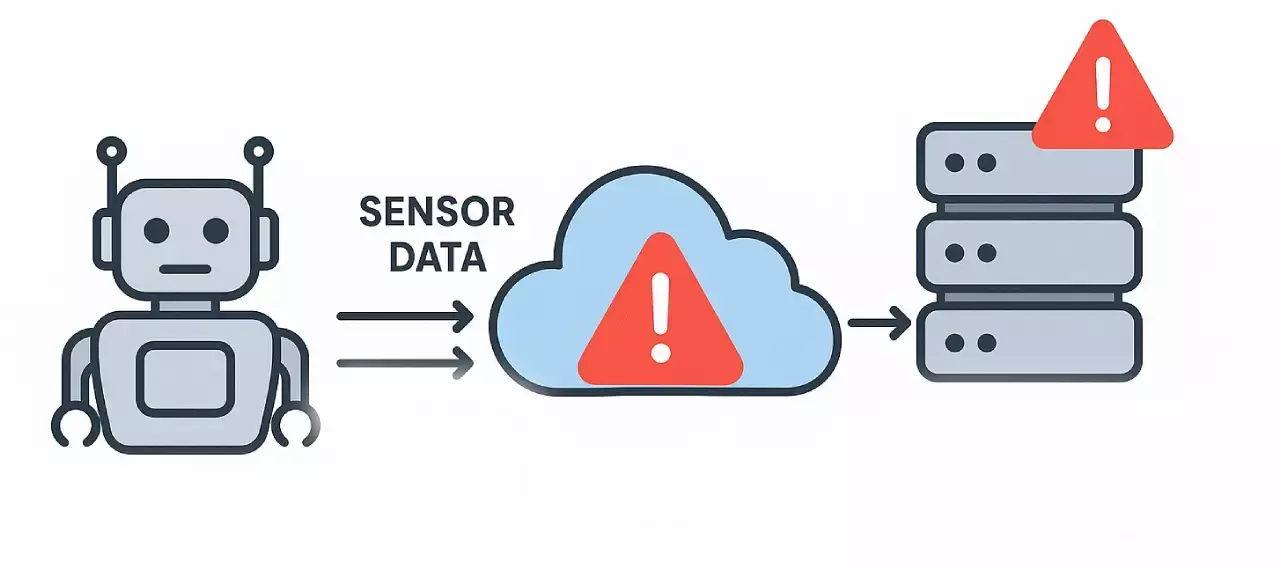

But here's where things get concerning. Researchers discovered that the G1 transmits audio, video and spatial data without alerting users, and that its proprietary encryption relies on hardcoded keys, which allow that data to be decrypted offline. In other words, the robot is continuously collecting and sending out information, and the protection around that data is far weaker than it should be.

Victor Mayoral-Vilches, author of the study, explained why this is so serious. As a mobile, human-scale sensor platform, a humanoid robot doesn't just observe: its cameras, microphones and sensors let it see, hear, map and physically interact with the spaces and people around it. That makes routine data collection far more invasive.

What the Research Revealed

The investigation found no evidence of privacy policies, data collection disclosures or user consent mechanisms, and no option to restrict the robot to local-only operation. Worse, the robot gives no indication of whether it is recording or transmitting information. You wouldn't know if it was watching or listening.

The technical details paint an even more disturbing picture. The analysis identified more than 40 active data streams ready for transmission, and the robot's encryption relied on static, hardcoded keys rather than randomly generated ones. This design flaw let researchers decrypt configuration files and firmware offline, without remote access to the robot and without resorting to brute-force attacks.
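To see why a static key is so damaging, consider the minimal Python sketch below. It is an illustration under stated assumptions, not a reconstruction of Unitree's actual scheme: the key, the config contents and the helper names (`keystream`, `xor_crypt`, `encrypt_config`) are all hypothetical, and the SHA-256 counter-mode keystream is a toy cipher chosen only because it needs nothing beyond Python's standard library. The general point it shows is that if every unit ships with the same key, extracting it once from any firmware image lets an analyst decrypt every unit's files offline, whereas per-device random keys confine the damage to a single robot.

```python
import hashlib
import secrets

# Hypothetical static key baked into every unit's firmware (illustration only).
HARDCODED_KEY = b"same-key-on-every-robot"


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy SHA-256 counter-mode keystream. NOT a production cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypts or decrypts: XOR with the same keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, nonce, len(data))))


def encrypt_config(key: bytes, plaintext: bytes) -> tuple[bytes, bytes]:
    """Returns (nonce, ciphertext); the nonce is stored in the clear next to the data."""
    nonce = secrets.token_bytes(16)
    return nonce, xor_crypt(key, nonce, plaintext)


# Hypothetical configuration file contents.
config = b'{"telemetry_host": "example.invalid", "upload": true}'

# Case 1: every robot shares HARDCODED_KEY. A key extracted from one firmware
# image decrypts another robot's file offline, with no brute force needed.
nonce_b, blob_b = encrypt_config(HARDCODED_KEY, config)
print(xor_crypt(HARDCODED_KEY, nonce_b, blob_b) == config)  # True

# Case 2: each robot gets its own random key at provisioning time.
# The key pulled from robot A no longer decrypts robot B's file.
key_a, key_b = secrets.token_bytes(32), secrets.token_bytes(32)
nonce_b, blob_b = encrypt_config(key_b, config)
print(xor_crypt(key_a, nonce_b, blob_b) == config)  # False (overwhelmingly likely)
```

A real device would instead use a vetted authenticated cipher such as AES-GCM with unique per-device keys kept in secure hardware; the toy keystream here only keeps the example dependency-free.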

But the researchers did not stop at finding weaknesses. They ran a custom AI agent on the robot itself to simulate an attack: scanning its environment, mapping local networks and preparing actions against the manufacturer's infrastructure. The test showed how a consumer-grade humanoid could potentially be turned into an offensive cyber weapon.

Not Everyone Agrees on How High the Risk Is

It's worth noting that not everyone considers these findings extraordinary. Jan Liphardt, CEO of OpenMind, contended that most connected machines, such as cars, baby monitors and cell phones, already connect to servers for updates and share similar vulnerabilities. In his view, the issue is less the problems themselves than the work of finding, documenting and fixing them.

He also noted that the G1's open design reflects its purpose as a research platform: its chest plates are held in place with Velcro so students can easily swap out parts. From this point of view, the robot was never intended to be a secure, consumer-ready product.

However, Mayoral-Vilches rejected this view. He argued that the systems being developed and tested today are setting the architectural patterns and security practices that will persist as the technology matures and is deployed over the next few years. The Robot Operating System (ROS) is a case in point: it was born in academic labs before becoming the backbone of the modern robotics industry, and security problems from those early days still cause trouble.

This Isn't the First Warning

Earlier this year, researchers found an undocumented remote access backdoor in Unitree's Go1 robot dog that gave third parties access to its camera feed and controls. Taken together, these findings suggest the security problems aren't isolated incidents and may point to larger issues in the company's approach to cybersecurity.

The research also emphasized that the G1 is built on aging middleware whose support windows have already closed, so vulnerabilities may remain unpatched. Add a weak secure-boot implementation and exposed hardware ports, and the security picture only gets murkier.

Why This Matters Now

The timing of these findings matters. Goldman Sachs projects that the humanoid robotics industry could be worth $38 billion by 2035, a sign of how quickly the market is growing as companies race to bring their machines to market.

The study warned that as these machines proliferate, so do their flaws. A single vulnerable robot may be manageable, but thousands of them, equipped with cameras and microphones and able to move through physical spaces, represent an entirely different level of risk.

There are also legal implications to consider. Depending on where and how it's used, the G1's persistent telemetry transmission could put it at odds with data protection laws such as the EU's GDPR. Organizations running these robots could be in breach of privacy regulations without even realizing it.

The Bigger Picture

What makes this situation especially tricky is that several trends are converging. Humanoid robots are becoming cheaper and more capable. They're moving out of controlled lab environments and into the real world. And they're being built with the same kind of connectivity that makes our phones and computers useful but also vulnerable.

The researchers put it bluntly: "The convergence of physical presence, connectivity, and autonomy makes for a threat surface only AI can successfully defend against, making Cybersecurity AIs vital infrastructure instead of optional add-ons."

For now, the G1 is largely a laboratory curiosity. But as prices continue to drop and adoption grows, these questions about security won't go away. If anything, they'll become more urgent. The challenge facing the robotics industry isn't simply creating humanoid robots that can walk, talk and work alongside us. It's ensuring that we can actually trust them when they do.

The full technical report from Alias Robotics is available for those interested in the details; it offers an in-depth look at the vulnerabilities and the testing methodology used in the research.

As humanoid robots continue their march from science fiction into reality, one thing becomes clear: the conversation about their security can't wait until after they're already walking among us.
